Tutorial: Building and Training a Neural Network Classification Model with Keras in Python

I won't waste words; let's go straight to the code.

Annotated version:

```python
# Classifier example
import numpy as np
# for reproducibility
np.random.seed(1337)
# from keras.datasets import mnist
from keras.utils import to_categorical
from keras.models import Sequential
from keras.layers import Dense, Activation
from keras.optimizers import RMSprop

# The data used here is the classic MNIST handwritten-digit dataset.
# download the mnist to the path if it is the first time to be called
# X shape (60,000 28x28), y
# (X_train, y_train), (X_test, y_test) = mnist.load_data()
# Download mnist.npz from:
# Link: https://pan.baidu.com/s/1b2ppKDOdzDJxivgmyOoQsA
# Extraction code: y5ir
# Put the downloaded mnist.npz in the current directory.
path = './mnist.npz'
with np.load(path) as f:
    X_train, y_train = f['x_train'], f['y_train']
    X_test, y_test = f['x_test'], f['y_test']

# Data pre-processing
# normalize
# X has shape (60,000, 28, 28): the input X is three-dimensional,
# i.e. 60,000 samples, each one a 28 x 28 grayscale image.
# X_train.reshape(X_train.shape[0], -1) keeps only the first dimension and
# flattens all remaining dimensions, however many there are, into one.
# -1 is used when you don't know (or don't want to spell out) one dimension:
# NumPy infers it as (total number of elements) / (product of the other dimensions).
# Using -1 here is a shortcut; it is equivalent to 28*28.
# After reshaping there are 60,000 rows, each with 784 data points (features).
# Dividing by 255 normalizes the data: every pixel is in 0..255,
# so after normalization every value lies between 0 and 1.
X_train = X_train.reshape(X_train.shape[0], -1) / 255
X_test = X_test.reshape(X_test.shape[0], -1) / 255

# Label encoding: convert y to one-hot vectors
y_train = to_categorical(y_train, num_classes=10)
y_test = to_categorical(y_test, num_classes=10)

# Another way to build your neural net
# A 2-layer network: the first layer uses ReLU, the second uses softmax.
# 32 is the output dimension of the first layer.
model = Sequential([
    Dense(32, input_dim=784),
    Activation('relu'),
    Dense(10),
    Activation('softmax'),
])

# Another way to define your optimizer: the optimization algorithm is RMSprop.
# (Older Keras versions name learning_rate `lr` and also accept `decay`.)
rmsprop = RMSprop(learning_rate=0.001, rho=0.9, epsilon=1e-08)

# We add metrics to get more results you want to see.
# Instead of defining your own optimizer, you can also use the built-in one: optimizer='rmsprop'
# Compile the model: model.compile configures the network for training.
model.compile(
    optimizer=rmsprop,
    loss='categorical_crossentropy',
    metrics=['accuracy'],
)

print('Training------------')
# Another way to train the model
# The previous program trained on X_train, Y_train batch by batch with train_on_batch;
# its default return value is the cost, and it printed results every 100 steps.
# The output here looks different; model.fit() feels clearer.
# The previous program (Python + Keras neural network for a regression model):
# https://blog.csdn.net/weixin_45798684/article/details/106503685
model.fit(X_train, y_train, epochs=2, batch_size=32)  # `epochs` replaces the old `nb_epoch`

print('\nTesting------------')
# Evaluate the model with the metrics we defined earlier
loss, accuracy = model.evaluate(X_test, y_test)
print('test loss:', loss)
print('test accuracy:', accuracy)
```
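To see what the reshape, normalization, and one-hot steps do without downloading MNIST, here is a minimal NumPy sketch on a hypothetical toy batch of three random 28 x 28 "images" (the pixels and labels are made up for illustration). `np.eye(10)[labels]` stands in for `to_categorical(labels, num_classes=10)`: for integer labels it produces the same 0/1 matrix, though the dtype may differ.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-in for X_train: 3 grayscale 28x28 images, pixels 0..255 (uint8 like MNIST)
images = rng.integers(0, 256, size=(3, 28, 28)).astype(np.uint8)
labels = np.array([5, 0, 9])  # hypothetical digit labels

# Keep the first axis and flatten the rest; -1 is inferred as 28*28 = 784
flat = images.reshape(images.shape[0], -1) / 255
print(flat.shape)  # (3, 784)

# One-hot encoding: row i has a single 1.0 in column labels[i]
one_hot = np.eye(10)[labels]
print(one_hot[0].tolist())  # [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

Dividing the `uint8` array by 255 also promotes it to floating point, which is what the network expects as input.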

運行結(jié)果:

```
Using TensorFlow backend.
Training------------
Epoch 1/2
60000/60000 [==============================] - 5s 87us/step - loss: 0.3435 - accuracy: 0.9046
Epoch 2/2
60000/60000 [==============================] - 5s 76us/step - loss: 0.1946 - accuracy: 0.9440

Testing------------
10000/10000 [==============================] - 0s 47us/step
test loss: 0.17407772153392434
test accuracy: 0.9513000249862671
```
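The `test loss` and `test accuracy` printed above are the categorical cross-entropy and the accuracy metric the model was compiled with, averaged over the test set. The NumPy sketch below shows roughly how those two numbers are computed from softmax outputs; the logits and labels are hypothetical, and clipping by `eps=1e-7` is an assumption modeled on the small epsilon Keras uses to avoid `log(0)`.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability; the result is unchanged
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over samples of -sum_k y_true[k] * log(y_pred[k])
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(-(y_true * np.log(y_pred)).sum(axis=1).mean())

def accuracy(y_true, y_pred):
    # Fraction of samples whose most probable class matches the one-hot label
    return float((y_true.argmax(axis=1) == y_pred.argmax(axis=1)).mean())

# Hypothetical final-layer outputs for 2 samples and 3 classes
logits = np.array([[2.0, 1.0, 0.1], [0.5, 2.5, 0.2]])
y_true = np.array([[1, 0, 0], [0, 0, 1]], dtype=float)
probs = softmax(logits)
print(categorical_crossentropy(y_true, probs))
print(accuracy(y_true, probs))  # 0.5
```

Only the probability assigned to the true class contributes to the loss, which is why the loss keeps falling as the model grows more confident even after accuracy levels off.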

Supplementary: Building a simple neural network with Keras (Sequential model + regression)

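A multi-layer fully connected neural network.

The number of neurons per layer, the number of layers, the activation functions, and so on can all be changed freely.

With a different loss function, the same setup can be used for other tasks, such as classification.

This is one of the most basic ways to build a neural network model in Keras. Keras also offers more advanced approaches (such as the functional API), and the official site documents the basic usage: Keras official website.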
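To illustrate what "freely modifiable" means here, the following NumPy sketch runs a forward pass through a stack of fully connected layers described by a list of `(units, activation)` pairs, so changing the layer count, widths, or activations is just a matter of editing that list. The spec mirrors the layer sizes and activations of the `ann` function below; the helper names `init_layers`, `forward`, and `mse`, the random-normal weight initialization, and the toy input are hypothetical (Keras `Dense` initializes its weights differently by default, and of course also trains them).

```python
import numpy as np

# Activation functions by name, as in Activation('sigmoid') etc.
ACTIVATIONS = {
    "sigmoid": lambda z: 1 / (1 + np.exp(-z)),
    "relu": lambda z: np.maximum(z, 0),
    "tanh": np.tanh,
    "linear": lambda z: z,
}

def init_layers(input_dim, spec, seed=0):
    """spec: list of (units, activation) pairs; returns (W, b, activation) per layer."""
    rng = np.random.default_rng(seed)
    layers, fan_in = [], input_dim
    for units, act in spec:
        W = rng.normal(0, 0.1, size=(fan_in, units))  # hypothetical initialization
        b = np.zeros(units)
        layers.append((W, b, ACTIVATIONS[act]))
        fan_in = units
    return layers

def forward(X, layers):
    out = X
    for W, b, act in layers:
        out = act(out @ W + b)  # one Dense layer followed by its Activation
    return out

def mse(y_true, y_pred):
    # The regression loss used below: mean_squared_error
    return float(np.mean((y_true - y_pred) ** 2))

spec = [(64, "sigmoid"), (256, "relu"), (256, "tanh"), (32, "tanh"), (1, "linear")]
X = np.random.default_rng(1).normal(size=(5, 8))  # hypothetical: 5 samples, 8 features
layers = init_layers(X.shape[1], spec)
pred = forward(X, layers)
print(pred.shape)  # (5, 1)
```

For a classification task, the last entry would become something like `(num_classes, "softmax")` and the loss would switch to cross-entropy.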

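```python
from keras.models import Sequential
from keras.layers import Dense, Activation
from keras.callbacks import ReduceLROnPlateau

# This builds a 4-layer fully connected network (not counting the input layer).
# The function's arguments and all parameters inside it can be changed freely.
def ann(X, y):
    '''
    X: training data
    y: labels corresponding to the training data
    '''
    # Initialize the model
    # First define a Sequential model as the framework, then add layers to it.
    # This is one of the most basic ways to build a neural network.
    model = Sequential()

    # Add layers
    # Dense is a fully connected layer; the first layer needs the input dimension, input_shape.
    # Activation sets each layer's activation function: you can pass a predefined activation
    # or any other advanced activation that follows the same interface.
    model.add(Dense(64, input_shape=(X.shape[1],)))
    model.add(Activation('sigmoid'))
    model.add(Dense(256))
    model.add(Activation('relu'))
    model.add(Dense(256))
    model.add(Activation('tanh'))
    model.add(Dense(32))
    model.add(Activation('tanh'))
    # Output layer: its size depends on the task.
    # Here it is a regression problem with a one-dimensional output.
    model.add(Dense(1))
    model.add(Activation('linear'))

    # Compile the model
    # optimizer: the optimizer (any can be chosen); loss: the loss function to minimize.
    model.compile(optimizer='rmsprop', loss='mean_squared_error')
    # Adjust the learning rate during training (multiply it by `factor` when the monitored
    # loss stops improving); you can also just keep the original base learning rate.
    reduce_lr = ReduceLROnPlateau(monitor='loss', factor=0.1, patience=10, verbose=0,
                                  mode='auto', min_delta=0.001, cooldown=0, min_lr=0)

    # Train the model
    # The model could also be returned from the function first and trained afterwards.
    # epochs: number of training epochs; batch_size: samples per update (mini-batch learning);
    # validation_split: fraction of the training data held out as a validation set.
    # callbacks: a list of callbacks used to inspect the model's internal state and statistics
    # during training; each callback method is invoked at its corresponding stage.
    # verbose: 0 = silent, 1 = progress bar, 2 = one line per epoch.
    model.fit(X, y, epochs=100, batch_size=50, validation_split=0.0,
              callbacks=[reduce_lr], verbose=0)
    # Print the model structure (summary() prints by itself and returns None)
    model.summary()
    return model
```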
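`ReduceLROnPlateau` with `factor=0.1, patience=10, min_delta=0.001` multiplies the learning rate by 0.1 whenever the monitored loss has failed to improve by more than `min_delta` for 10 consecutive epochs. The pure-Python function below, `simulate_reduce_lr`, is a simplified, hypothetical simulation of that rule for a minimized metric; it ignores `cooldown` (0 in the code above) and `verbose`, and it is not the Keras implementation itself.

```python
def simulate_reduce_lr(losses, lr, factor=0.1, patience=10, min_delta=0.001, min_lr=0.0):
    """Return the learning rate in effect after each epoch's loss is observed."""
    best = float("inf")
    wait = 0  # epochs since the last sufficient improvement
    history = []
    for loss in losses:
        if loss < best - min_delta:
            # Improved by more than min_delta: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                # Plateau: shrink the learning rate, but never below min_lr
                if lr > min_lr:
                    lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history

# Hypothetical loss curve that stalls after epoch 3
losses = [0.9, 0.5, 0.4] + [0.4] * 10
for epoch, lr in enumerate(simulate_reduce_lr(losses, lr=0.001), start=1):
    print(epoch, lr)
# The rate drops to about 1e-4 at epoch 13, the 10th epoch without improvement.
```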

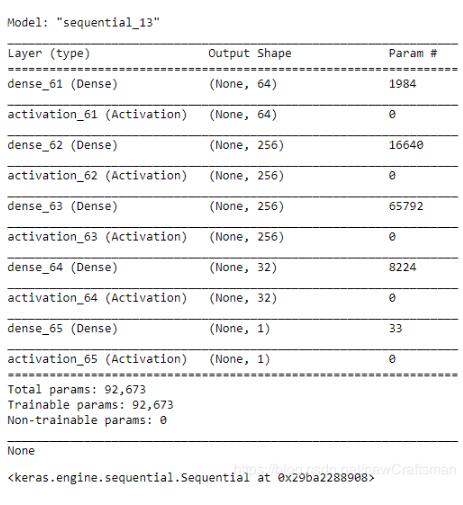

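The figure above shows the structure of this model; the number after the underscore in each layer name depends on how many times that kind of layer has been created (for example, how many times `ann` has been called).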
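Because `Activation` layers have no weights, the parameter counts in a model summary like this come entirely from the `Dense` layers: each has `fan_in * units` weights plus `units` biases. A small sketch of that arithmetic follows; the input dimension of 10 is a hypothetical value (the real one depends on `X.shape[1]`), and the helper names are illustrative. It also checks the 784 to 32 to 10 classifier from the first example.

```python
def dense_params(fan_in, units):
    # Weight matrix (fan_in x units) plus one bias per unit
    return fan_in * units + units

def count_params(input_dim, units_per_layer):
    total, fan_in = 0, input_dim
    for units in units_per_layer:
        total += dense_params(fan_in, units)
        fan_in = units
    return total

print(count_params(10, [64, 256, 256, 32, 1]))  # 91393 (hypothetical input_dim=10)
print(count_params(784, [32, 10]))              # 25450 (the MNIST classifier)
```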

That concludes this tutorial on building and training a neural network classification model with Keras in Python. I hope it serves as a useful reference.

